If we all “hang out” virtually, we make ourselves smaller.

A few days ago I watched a car drifting on its own across a sloped parking lot, motor off. There was an occupant, but he was lost to everything except the text he was writing. He was clearly headed for trouble on the far side of the lot when he finally realized that the laws of physics had put him in the path of others. I fear this is us: drifting while the world waits, too preoccupied with a screen to notice.

As a case in point, Brian Chen’s recent technology piece in the New York Times (December 29, 2022) eagerly described coming advances in digital media: better iPhones, new virtual reality equipment, software that allows people to “share selfies at the same time,” and social media options that provide new “fun places to hang out.”

So glib and so short-sighted. When did a few inches of glass with microchips become a “place”? Language like this makes one wonder whether these technology journalists, as students, ever encountered the rich expanses of social intelligence that come to life in real time. Too few technology mavens seem to give any weight to the range of human experience built on hard-won achievements of cognition and competence. Consumer-based digital media are mostly about speed rather than light. If we all “hang out” virtually, we make ourselves smaller, using the clever equivalent of a mirror to avoid noticing our diminished relevance.

Most social media sites give us only the illusion of connection. This is perhaps one reason movies, sports and modern narratives are so attractive: we can at least witness people in actual “places” doing more with their lives than exercising their thumbs. Spending time with young children also helps. In their early years children reflect our core nature by seeking direct and undivided attention; no virtual parenting, please. In expecting more than nominal indifference, they may be more like their grandparents than their parents.

A.I. pollutes the idea of authorship

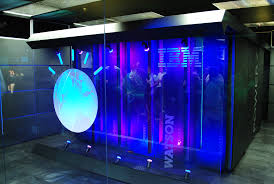

Among other changes expected next year, Chen described a “new chatty assistant” from an A.I. firm. The software, called ChatGPT, can let a nearly sentient chatbot act as a person’s “research assistant,” generate business proposals, or even write research papers. He’s enthusiastic about how these kinds of programs will “streamline people’s work flows.” But I suspect they require us to put our minds in idle, no longer burdened with functioning as agents of thought. Apparently the kinks to be worked out would be merely technical, freeing a person from having to use complex problem-solving skills. Indeed, the “work” of a computer-generated report cannot be said to come from the person at all. As with so many message assistants, A.I. pollutes the idea of authorship. Who is in charge of the resulting verbal action? Hello, HAL.

Consider how much worse it is for teachers of logic, writing, grammar, vocabulary, research and rhetoric, let alone their students. All ought to be engaged in shaping minds that are disciplined, smart about sources, and able to apply their life experiences to new circumstances. It is no wonder that the increasing presence of intellectual fakery makes some college degrees nearly meaningless. Paying for an A.I.-generated college paper is bad enough; generating plans for action from a self-writing Word program is a nightmare for all of us who expect our interlocutors to be competent, conscious and moral free agents.
